What are your responsibilities as a data scientist?

What industries would you work in? Where would you refuse to work?

What applications would you refuse to work on?

When are you responsible for how a system you built is used?

Predictive Advertising

https://www.nytimes.com/2012/02/19/magazine/shopping-habits.html

Target worked out how to identify women in their second trimester of pregnancy from their shopping habits, so that it could target them with baby-related advertising.

About a year after the model was put into use, a father walked into a Target store, furious that his daughter, who was still in high school, had received a circular with baby-related coupons and deals.

It later emerged that his daughter was pregnant: her purchasing habits had revealed the pregnancy before her family learned of it.

Garbage In, Garbage Out

On two occasions I have been asked, 'Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?' I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question. (Charles Babbage, Passages from the Life of a Philosopher, 1864)

Big data methods amplify the biases present in their training data.

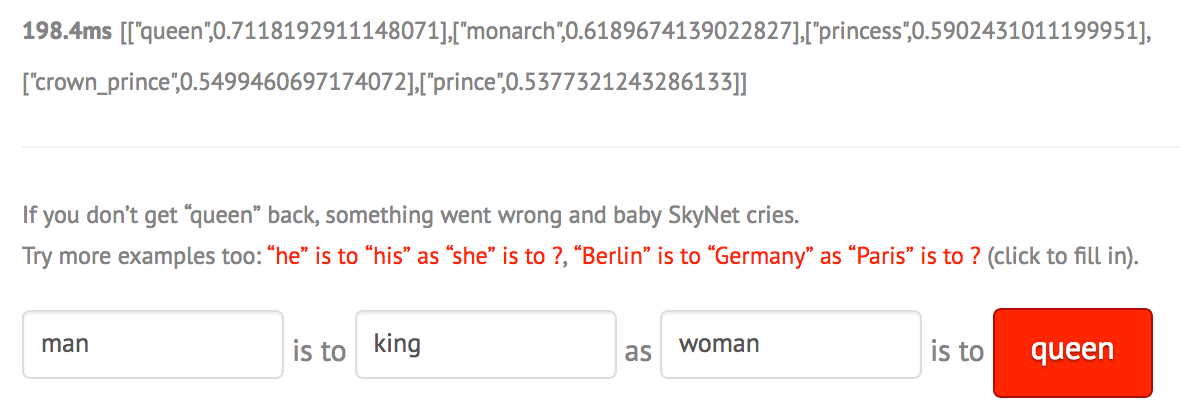

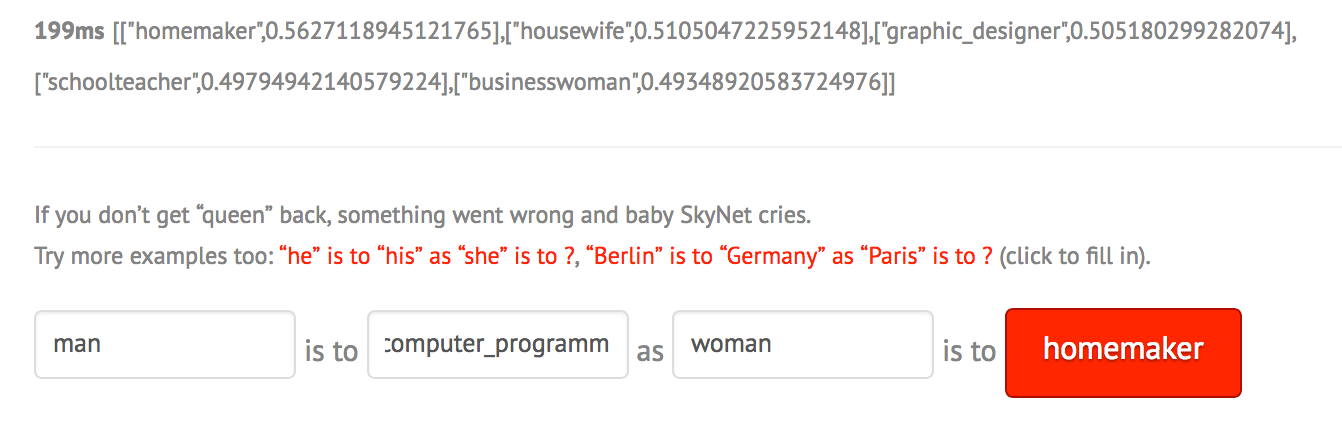

Consider word2vec, trained on the Google News dataset.

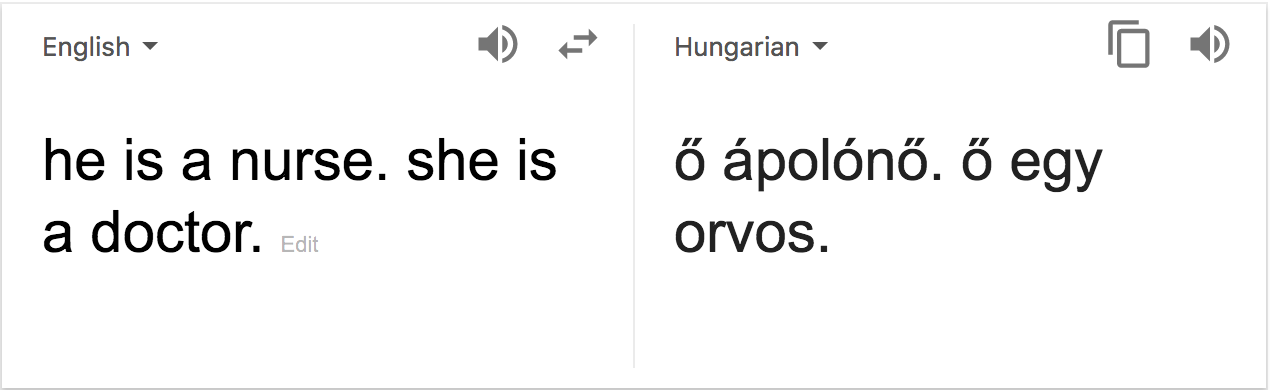

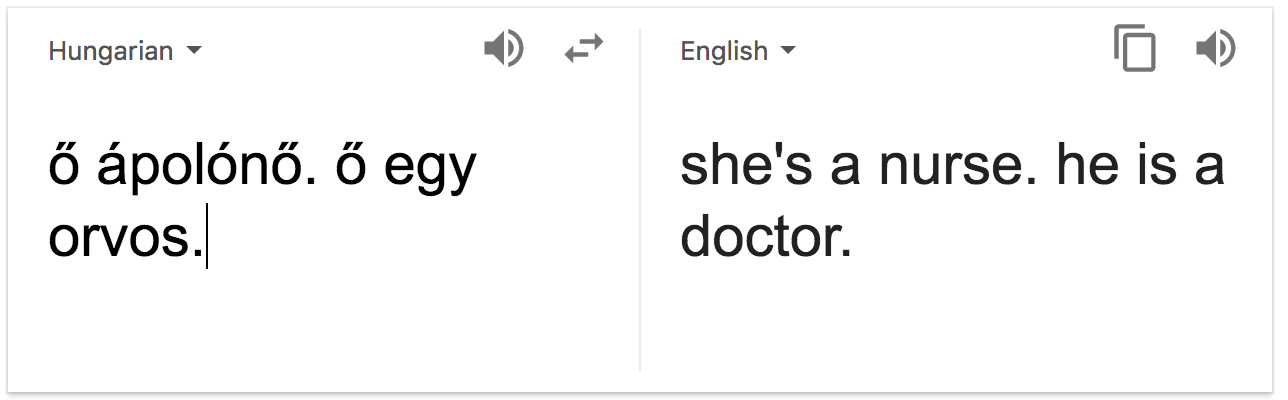
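Analogy queries of the form "man is to programmer as woman is to ?" are answered with vector arithmetic: compute the offset `programmer - man + woman` and return the nearest remaining word by cosine similarity. The minimal sketch below is in plain Python and uses hand-made 3-dimensional toy vectors. They are hypothetical and are not real word2vec or Google News embeddings. The point is that the arithmetic faithfully returns whatever associations are already baked into the vectors.

```python
import math

# Hypothetical hand-written toy vectors, NOT real word2vec/Google News embeddings.
# Dimension 0 plays the role of a "gender" direction that has leaked into
# occupation words, as it can when embeddings are learned from biased text.
TOY_VECTORS = {
    "man":        [1.0, 0.0, 0.2],
    "woman":      [-1.0, 0.0, 0.2],
    "programmer": [0.6, 1.0, 0.1],
    "homemaker":  [-0.6, 1.0, 0.1],
    "engineer":   [0.5, 0.9, 0.3],
    "nurse":      [-0.5, 0.8, 0.4],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, vectors):
    """Answer 'a is to b as c is to ?' using the offset b - a + c.

    Returns the word most cosine-similar to the offset, excluding the query words.
    """
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))


print(analogy("man", "programmer", "woman", TOY_VECTORS))  # prints: homemaker
```

On real embeddings, gensim exposes the same kind of query as `KeyedVectors.most_similar(positive=["programmer", "woman"], negative=["man"])`, which also normalizes the vectors before combining them. Nothing in the arithmetic asks whether an association is fair. It only reflects the text the vectors were trained on.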

Google Translate uses word2vec and similar techniques.

Predictive Sentencing

https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

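Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) is used by US courts to inform bail and prison-sentence decisions by predicting a defendant's likelihood of reoffending.

ProPublica analyzed COMPAS risk scores and found racial disparities in the assessments: among defendants who did not go on to reoffend, Black defendants were nearly twice as likely as white defendants to have been labeled higher risk.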
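A disparity of this kind shows up when error rates are broken down by group instead of being reported as a single accuracy number. The minimal Python sketch below computes per-group accuracy and false positive rate, meaning the share of people who did not reoffend but were flagged high risk. The records are hypothetical toy data invented for illustration, not ProPublica's COMPAS dataset. In this toy data both groups have the same accuracy while one group's false positive rate is twice the other's.

```python
from collections import defaultdict

# Hypothetical toy records: (group, flagged_high_risk, reoffended).
# Invented for illustration only; these are NOT ProPublica's COMPAS data.
RECORDS = [
    ("A", True, True), ("A", True, False), ("A", True, False),
    ("A", False, False), ("A", False, False), ("A", False, True),
    ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", False, False), ("B", False, True), ("B", False, True),
]


def group_metrics(records):
    """Return {group: (accuracy, false_positive_rate)}.

    The false positive rate is the fraction of people who did NOT reoffend
    but were nevertheless flagged as high risk.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, flagged, reoffended in records:
        total[group] += 1
        correct[group] += int(flagged == reoffended)
        if not reoffended:
            negatives[group] += 1
            false_pos[group] += int(flagged)
    return {
        g: (correct[g] / total[g],
            false_pos[g] / negatives[g] if negatives[g] else float("nan"))
        for g in total
    }


for group, (acc, fpr) in sorted(group_metrics(RECORDS).items()):
    print(f"group {group}: accuracy={acc:.2f}, false positive rate={fpr:.2f}")
```

Reporting only the overall accuracy (0.50 for both toy groups) would hide the fact that group A's non-reoffenders are flagged at twice the rate of group B's.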

Predictive Policing

There are efforts under way to use data science to direct policing resources, for example by sending more police patrols to areas with historically higher crime.

How do we measure higher crime?

A higher rate of police interventions, or even a higher rate of interventions that lead to convictions, might not be an accurate measure of crime: these rates correlate with the historical distribution of police patrols.

This starts a feedback loop: more police patrols → more police interventions → more skewed data for predictive policing models → more police patrols.
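The loop can be made concrete with a small deterministic simulation. The Python sketch below rests on hypothetical assumptions chosen for illustration: two districts with identical true crime rates; the district with the most recorded incidents is treated as the predicted "hot spot" and receives 70% of patrols; and an incident is recorded only in proportion to the patrols present to observe it. A small historical skew in the records then grows, even though the underlying crime rates never differ.

```python
def simulate_hot_spot_policing(true_rates, recorded, days,
                               patrols_per_day=10.0, hot_spot_share=0.7):
    """Toy feedback loop: records -> prediction -> patrols -> records.

    Hypothetical assumptions, for illustration only:
    - the district with the most recorded incidents is the predicted hot spot
      and gets `hot_spot_share` of the patrols; the rest is split evenly;
    - the expected number of incidents recorded in a district per day is
      true_rate * patrols sent there (crime nobody observes is never recorded).
    Returns the final recorded counts and, for each day, the hot spot's share
    of all recorded incidents.
    """
    recorded = list(recorded)
    n = len(recorded)
    hot_spot_shares = []
    for _ in range(days):
        # "Prediction": the district with the most records so far.
        hot = max(range(n), key=lambda i: recorded[i])
        for i in range(n):
            share = hot_spot_share if i == hot else (1 - hot_spot_share) / (n - 1)
            recorded[i] += true_rates[i] * patrols_per_day * share
        hot_spot_shares.append(recorded[hot] / sum(recorded))
    return recorded, hot_spot_shares


# Identical true crime rates; district 0 starts with slightly more records
# only because it was historically patrolled more.
final, shares = simulate_hot_spot_policing([0.3, 0.3], [11, 10], days=100)
print(f"start: {11 / 21:.2f}, after 1 day: {shares[0]:.2f}, "
      f"after 100 days: {shares[-1]:.2f}")
# prints: start: 0.52, after 1 day: 0.55, after 100 days: 0.69
```

Nothing in the data ever corrects the initial skew, because the model only sees crime where it has already sent patrols.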

Big Data means precise data

With large and comprehensive enough data sets, we can derive very fine-grained information, down to the level of individual people.

Modern political campaigns use micro-targeting: they keep files tracking individual voters and craft political messages aimed at very narrow audiences.

The Obama campaign was notable for this, and Cambridge Analytica came to public attention when its data harvesting work for the Trump campaign and for the Brexit Leave campaigns was revealed.

Learning Racism

Microsoft released an AI chatbot named Tay on March 23, 2016. The bot was designed to mimic the language of a late-teenage American girl and to learn from its interactions with people on Twitter.

Through a combination of learning from those interactions and a "repeat after me" feature, Tay began tweeting racist and sexual messages, and it was suspended after 16 hours.

What can we do?

Often, the data scientist is not in a position to decide whether or not to use data driven methods or algorithms for a task.

With biased data there is an inherent tension between

- Accuracy with respect to the available data

- Fairness and decreased bias in application

One task of a responsible data scientist is to tell end users accurately what balance a particular model strikes between these goals, and what range of behaviors is possible.
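One way to show end users that range is to evaluate the same model under several decision policies and report accuracy next to a fairness measure. The Python sketch below uses the gap between per-group selection rates, which is only one of several fairness measures. The data is hypothetical: model scores plus labels taken from historical records that may themselves be biased. Switching from a single shared threshold to group-specific thresholds (one possible post-processing choice among many) closes the gap but lowers accuracy as measured against those labels.

```python
# Hypothetical toy data: (group, model_score, label_from_historical_records).
# Invented for illustration; the labels stand in for possibly biased data.
RECORDS = [
    ("A", 0.9, 1), ("A", 0.8, 1), ("A", 0.6, 0), ("A", 0.4, 0),
    ("B", 0.6, 1), ("B", 0.4, 0), ("B", 0.35, 0), ("B", 0.2, 0),
]


def evaluate(records, thresholds):
    """Return (accuracy, selection_rate_gap) for per-group score thresholds.

    A record is selected (predicted 1) if its score >= its group's threshold.
    The gap is the highest minus the lowest per-group selection rate.
    """
    correct = 0
    selected = {}
    counts = {}
    for group, score, label in records:
        predicted = 1 if score >= thresholds[group] else 0
        correct += int(predicted == label)
        selected[group] = selected.get(group, 0) + predicted
        counts[group] = counts.get(group, 0) + 1
    rates = [selected[g] / counts[g] for g in counts]
    return correct / len(records), max(rates) - min(rates)


for name, thresholds in [
    ("same threshold for everyone", {"A": 0.5, "B": 0.5}),
    ("equal selection rates", {"A": 0.5, "B": 0.3}),
]:
    acc, gap = evaluate(RECORDS, thresholds)
    print(f"{name}: accuracy={acc:.3f}, selection-rate gap={gap:.2f}")
```

Neither row is "the" right answer. The table makes the trade-off explicit so that the people deploying the model can choose knowingly.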

https://www.nottingham.ac.uk/bioethics/ethical-matrix.html

https://www.kdnuggets.com/2018/07/domino-data-science-leaders-summit-highlights.html

Cathy O'Neil proposes an ethical matrix, adapted from bioethics: arrange the stakeholders (everyone who is affected) and the properties of concern into a matrix, and mark each cell with the severity of the concern.
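At its core, the ethical matrix is a small two-dimensional table. The minimal Python sketch below stores it as a nested dictionary with stakeholders as rows and properties as columns. It assumes a hypothetical three-level severity scale. The stakeholders, properties, and markings are likewise invented for a generic risk-assessment tool and are not taken from O'Neil's own matrices.

```python
from enum import Enum


class Severity(Enum):
    """Hypothetical three-level severity scale for one cell of the matrix."""
    OK = "ok"
    CONCERN = "concern"
    SEVERE = "SEVERE"


def build_matrix(stakeholders, properties, markings):
    """Fill every (stakeholder, property) cell; unmarked cells default to OK."""
    return {
        s: {p: markings.get((s, p), Severity.OK) for p in properties}
        for s in stakeholders
    }


def severe_cells(matrix):
    """List the (stakeholder, property) pairs marked SEVERE, in table order."""
    return [(s, p) for s, row in matrix.items()
            for p, sev in row.items() if sev is Severity.SEVERE]


def render(matrix, properties):
    """Format the matrix as a plain-text table, one stakeholder per row."""
    first = max(len(s) for s in matrix) + 2
    col = max(max(len(p) for p in properties), 7) + 2
    lines = [" " * first + "".join(p.ljust(col) for p in properties)]
    for s, row in matrix.items():
        lines.append(s.ljust(first) + "".join(row[p].value.ljust(col) for p in properties))
    return "\n".join(lines)


# Hypothetical example; every entry here is invented for illustration.
STAKEHOLDERS = ["defendants", "judges", "public", "vendor"]
PROPERTIES = ["accuracy", "false positives", "transparency"]
MARKINGS = {
    ("defendants", "false positives"): Severity.SEVERE,
    ("defendants", "transparency"): Severity.SEVERE,
    ("judges", "accuracy"): Severity.CONCERN,
    ("public", "false positives"): Severity.CONCERN,
}

matrix = build_matrix(STAKEHOLDERS, PROPERTIES, MARKINGS)
print(render(matrix, PROPERTIES))
print("needs attention:", severe_cells(matrix))
```

Keeping unmarked cells explicit (defaulting to `ok`) makes every stakeholder appear in the table, so no affected group is silently left out.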

Hippocratic Oath

Emanuel Derman and Paul Wilmott proposed a Hippocratic Oath for financial modelers in their 2009 Financial Modelers' Manifesto. It applies just as well to data scientists:

- I will remember that I didn't make the world, and it doesn't satisfy my equations.

- Though I will use models boldly to estimate value, I will not be overly impressed by mathematics.

- I will never sacrifice reality for elegance without explaining why I have done so.

- Nor will I give the people who use my model false comfort about its accuracy. Instead, I will make explicit its assumptions and oversights.

- I understand that my work may have enormous effects on society and the economy, many of them beyond my comprehension.