Lecture 8: Text Data¶

What is text?¶

- A character is one or several bytes, understood in the context of a text encoding.

- A word is one or several characters, in a linear order.

- A sentence is one or several words, in a linear order.

- A document is one or several sentences, in a linear order.

- A corpus is one or several documents.

Many subtler details exist, and they matter.

From bytes to text¶

As we start with a text data point, we have a sequence of bytes (or Unicode codepoints...). From these, we need to extract a useful representation of the content.

Tokenizer¶

A tokenizer takes a byte stream and divides it up into distinct components. Something like:

Hold infinity in the palm of your hand.

transforms into:

| Hold | [space] | infinity | [space] | in | [space] |
|---|---|---|---|---|---|
| the | [space] | palm | [space] | of | [space] |
| your | [space] | hand | [period] | | |
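A minimal sketch of such a tokenizer, assuming only three token classes (runs of word characters, runs of whitespace, single punctuation marks). The pattern and the function name `tokenize` are illustrative choices, not a standard; real tokenizers use much richer rules.

```python
import re

# Assumed token classes: a run of word characters, a run of whitespace,
# or one single character that is neither (punctuation, symbols).
TOKEN_PATTERN = re.compile(r"\w+|\s+|[^\w\s]")

def tokenize(text):
    """Split text into word, whitespace and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)

text = "Hold infinity in the palm of your hand."
tokens = tokenize(text)
print(tokens)
```

Because every character lands in exactly one token, joining the tokens gives back the original string.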


In some cases, tokens are smaller units than character sequences separated by spaces or punctuation. How many tokens should be generated for each of the following?

| 25¢ | O'Malley | 5th | 250mi |
|---|---|---|---|
| Professor | Professors | Professor's | Professors' |

Outside English, other languages bring far gnarlier features.


Tagging¶

Tokens are largely a syntactic subdivision of the text. For more semantic content, one common step is to tag the tokens. By tagging, each token is equipped with information about its role in a sentence or its meaning.

Parsing¶

Especially in translation or in systems controlled by natural language, the overall structure of the text needs to be recovered. This is the role of parsing - to create a data structure that captures subdivisions into phrases, contexts and dependencies.

From Text to Feature Vectors¶

Most machine learning and data analytics systems expect, sooner or later, tidy data: some sort of numeric matrix with observations for each row and features for each column.

Somehow we need to take a sequence of (tagged) tokens and produce a fixed-size vector to process.

Dictionary¶

A standard first step in this process is to extract a list of all occurring words, and order them. The feature vectors will then use the position in this dictionary to describe a token.
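A small sketch of this step, assuming the documents are already tokenized and lowercased. The function name `build_dictionary` and the toy corpus are hypothetical, and alphabetical order is only one possible ordering.

```python
def build_dictionary(documents):
    """Map every distinct token to its position in the sorted vocabulary."""
    vocabulary = sorted({token for doc in documents for token in doc})
    return {token: index for index, token in enumerate(vocabulary)}

# Hypothetical toy corpus, already tokenized and lowercased.
documents = [
    ["hold", "infinity", "in", "the", "palm", "of", "your", "hand"],
    ["the", "palm", "of", "the", "hand"],
]

dictionary = build_dictionary(documents)

# Each token is now described by its position in the dictionary.
encoded = [[dictionary[token] for token in doc] for doc in documents]
print(dictionary)
print(encoded)
```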


Stop words¶

Some words are so very common that they will overwhelm any system. For instance, each slide up until now has featured the words

a of or the

It is common practice to filter out these words.
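A sketch of the filter, assuming a stop list made of just the four words above. Practical stop lists are much longer and depend on language and task; the name `remove_stop_words` is illustrative.

```python
# Assumed stop list: only the four words named above.
STOP_WORDS = {"a", "of", "or", "the"}

def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Drop every token whose lowercase form is in the stop list."""
    return [token for token in tokens if token.lower() not in stop_words]

tokens = ["Hold", "infinity", "in", "the", "palm", "of", "your", "hand"]
filtered = remove_stop_words(tokens)
print(filtered)
```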

Stemming¶

The issue with the professor, professors, professor's, professors' example can be dealt with either by splitting into morpheme tokens, or by stemming - removing grammatical markers from words.
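A deliberately naive sketch of stemming for exactly this example: it strips an English plural or possessive ending and nothing else. The function `naive_stem` and its suffix list are assumptions made for illustration; it over-stems many ordinary words (for instance "glass"), and practical systems use an established algorithm such as the Porter stemmer.

```python
def naive_stem(word):
    """Strip a trailing plural or possessive marker (toy rule set)."""
    word = word.lower()
    # Longer suffixes are tried first so "professors'" loses "s'" in one step.
    for suffix in ("s'", "'s", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word

forms = ["Professor", "Professors", "Professor's", "Professors'"]
stems = [naive_stem(form) for form in forms]
print(stems)
```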


Bag of words¶

A first step is to use an incidence vector: for each document, put a 1 in the feature vector if that word occurs in the document; a 0 if it does not.

Word counts¶

A more flexible option is to count the occurrences of each word in each document. This weights a word more heavily if it is used often than if it shows up sporadically.
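Both representations can be sketched in a few lines, assuming a fixed, ordered vocabulary. The vocabulary, the document and the function names here are hypothetical.

```python
from collections import Counter

def count_vector(tokens, vocabulary):
    """Number of occurrences of each vocabulary term in the document."""
    counts = Counter(tokens)
    return [counts[term] for term in vocabulary]

def incidence_vector(tokens, vocabulary):
    """1 if the vocabulary term occurs in the document, else 0."""
    return [1 if count > 0 else 0 for count in count_vector(tokens, vocabulary)]

vocabulary = ["hand", "infinity", "palm", "sand"]
document = ["palm", "hand", "palm", "infinity"]

counts = count_vector(document, vocabulary)
incidence = incidence_vector(document, vocabulary)
print(counts, incidence)
```

Every document maps to a vector of the same length as the vocabulary, regardless of how long the document is.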

Term frequency¶

Bag of words and word counts both fit into a general scheme of term frequency weightings:

| Name | Weighting |
|---|---|
| binary | 0/1 |
| raw count | number of occurrences |
| term frequency | raw count / document length |
| log normalized | log(1 + raw count) |
| K normalized | K + (1-K)(raw count / highest raw count) |
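The rows of the table can be computed side by side. This is a sketch under assumptions: the natural logarithm, a default of K = 0.5, and "highest raw count" read as the count of the most frequent term in the same document. The function name `term_frequencies` is illustrative, and the document must be non-empty.

```python
import math
from collections import Counter

def term_frequencies(tokens, term, K=0.5):
    """All five weightings from the table for one term in one document."""
    counts = Counter(tokens)
    raw = counts[term]
    highest = max(counts.values())  # count of the most frequent term
    return {
        "binary": 1 if raw > 0 else 0,
        "raw count": raw,
        "term frequency": raw / len(tokens),
        "log normalized": math.log(1 + raw),
        "K normalized": K + (1 - K) * raw / highest,
    }

document = ["palm", "hand", "palm", "infinity"]
weights = term_frequencies(document, "palm")
print(weights)
```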

Inverse Document Frequency¶

Inverse document frequency measures how much information a word provides: whether it is rare or common across all documents. Given a corpus with $N$ documents, and writing $n_t$ for the number of documents that contain the term $t$, some common measures are

| Name | Weighting |
|---|---|
| unary | 1 (i.e. no weighting at all) |
| idf | $\log(N/n_t) = -\log(n_t/N)$ |
| smooth idf | $\log(N/(1+n_t))$ |
| max idf | $\log[(\max_{t'} n_{t'}) / (1+n_t)]$ |
| probabilistic idf | $\log[(N-n_t)/n_t]$ |
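A sketch of the table as code, assuming the natural logarithm and $0 < n_t < N$ (otherwise some entries are undefined). The function name `idf_weights` and the example numbers are made up for illustration.

```python
import math

def idf_weights(N, n_t, max_n):
    """The five weightings from the table.

    N: number of documents, n_t: documents containing the term,
    max_n: the largest document frequency of any term in the corpus.
    """
    return {
        "unary": 1.0,
        "idf": math.log(N / n_t),
        "smooth idf": math.log(N / (1 + n_t)),
        "max idf": math.log(max_n / (1 + n_t)),
        "probabilistic idf": math.log((N - n_t) / n_t),
    }

# Hypothetical corpus: 100 documents, the term occurs in 4 of them,
# and the most widespread term occurs in 90.
weights = idf_weights(N=100, n_t=4, max_n=90)
print(weights)
```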

TF-IDF¶

Term frequency - inverse document frequency is an adjustment of the counting strategy that treats terms with high term frequency and low document frequency as more semantically meaningful: a term that is frequent in the documents where it appears, but appears in few documents.

$$ tfidf(t, d, D) = tf(t,d) \cdot idf(t,D) $$

Comparing word vectors¶

For many of the term frequency measures, the values increase with the size of the document, so a comparison between documents should not depend on vector length. One particularly common measure is cosine similarity - a normalized dot product:

$$ CS(X,Y) = \frac{X\cdot Y}{\|X\|\cdot\|Y\|} $$

This measures the cosine of the (high-dimensional) angle between the two vectors - disregarding the magnitude of the vectors.
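A sketch that combines the two ideas: TF-IDF vectors built with raw counts as tf and $\log(N/n_t)$ as idf (one choice among many), then compared by cosine similarity. The function names and the toy corpus are hypothetical, and the similarity is undefined for an all-zero vector.

```python
import math
from collections import Counter

def tfidf_vectors(documents):
    """Raw-count tf times log(N / n_t) idf, one vector per document."""
    vocabulary = sorted({t for doc in documents for t in doc})
    N = len(documents)
    # n_t: number of documents containing each term.
    doc_freq = Counter(t for doc in documents for t in set(doc))
    vectors = []
    for doc in documents:
        counts = Counter(doc)
        vectors.append([counts[t] * math.log(N / doc_freq[t]) for t in vocabulary])
    return vocabulary, vectors

def cosine_similarity(x, y):
    """Dot product divided by the product of the Euclidean norms."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)

documents = [
    ["palm", "hand", "palm"],
    ["palm", "hand", "palm", "palm", "hand", "palm"],  # first document twice over
    ["sand", "grain", "world"],
]
vocabulary, vectors = tfidf_vectors(documents)
same_direction = cosine_similarity(vectors[0], vectors[1])
no_overlap = cosine_similarity(vectors[0], vectors[2])
print(same_direction, no_overlap)
```

The second document is the first one repeated, so its vector is twice as long but points in the same direction. The third document shares no terms with the first, so the two vectors are orthogonal.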

Densifying¶

Word vectors are very, very sparse in most applications. Several transformations exist that make each dimension in the vector more meaningful.

Most dimensionality reduction methods could work - but one method is particularly popular lately:

word2vec¶

One word embedding scheme with impressive additive properties (adding / subtracting word vectors maps to semantically meaningful relationships) is word2vec.

The fundamental idea with word2vec is to train a neural network with one hidden layer to predict a word from its context (Continuous Bag of Words / CBOW) or a context from a word (Skip-grams) - and then use the hidden layer activations as a vector representative of the word.
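The two training setups differ only in how (input, target) examples are cut out of the text. The sketch below builds those examples and nothing more; it does not train a network. The function names and the window size are assumptions for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """(center word, context word) pairs: predict a context from a word."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """(context words, target word) examples: predict a word from its context."""
    examples = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window): i] + tokens[i + 1: i + window + 1]
        examples.append((context, target))
    return examples

tokens = ["hold", "infinity", "in", "the", "palm"]
pairs = skipgram_pairs(tokens, window=1)
examples = cbow_examples(tokens, window=1)
print(pairs)
print(examples)
```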

Pretrained, ready-to-use word2vec models are available for download.

Discriminative and Generative Models¶

A discriminative model is one that provides conditional probabilities (or non-probabilistic statements) - and thus allows us to tell the difference between objects, but not to generate new examples of objects.

A generative model is one that produces a joint probability distribution - from which we can sample to generate new objects.

Probabilistic Graphical Models¶

One particular type of generative model that I have seen very often in machine learning contexts is the Probabilistic Graphical Model (PGM).

Given a directed acyclic graph $\Gamma=(V,E)$, a PGM on that graph is a probabilistic model that encodes the joint probability as a product of the probabilities of the nodes, each conditioned on its parents in the graph:

$$ p(x) = \prod_{v\in V} p(x_v \mid \{x_s : (s, v)\in E\}) $$

Software supporting these models includes JAGS, OpenBUGS and Stan.
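To make the factorization concrete, here is a sketch on a hypothetical three-node network of binary variables (rain → sprinkler, rain → wet, sprinkler → wet). The structure and every probability in it are invented for illustration; the point is only that the joint probability is the product of one conditional per node.

```python
from itertools import product

# Hypothetical graph: each node lists its parents.
parents = {"rain": (), "sprinkler": ("rain",), "wet": ("rain", "sprinkler")}

# Probability that a node is True, keyed by the values of its parents.
# All numbers are made up.
p_true = {
    "rain": {(): 0.2},
    "sprinkler": {(True,): 0.01, (False,): 0.4},
    "wet": {
        (True, True): 0.99, (True, False): 0.8,
        (False, True): 0.9, (False, False): 0.0,
    },
}

def joint_probability(assignment):
    """Product over nodes of p(node value | parent values)."""
    prob = 1.0
    for node, node_parents in parents.items():
        parent_values = tuple(assignment[p] for p in node_parents)
        p = p_true[node][parent_values]
        prob *= p if assignment[node] else 1.0 - p
    return prob

example = joint_probability({"rain": True, "sprinkler": False, "wet": True})

# Summing the joint over all 2^3 assignments should give 1.
total = sum(
    joint_probability(dict(zip(parents, values)))
    for values in product([True, False], repeat=len(parents))
)
print(example, total)
```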

Plate Notation¶

Graphical Models can end up very messy to draw out. Significant simplification is available through plate notation:

Each random variable is a circle. Observable random variables are filled in, latent variables are clear.

Each graphical relation is a directed edge.

Indexed collections of related entities are enclosed in a square (the plate) which is annotated by its indexing set.

[Figure: plate diagram of the model, produced by `pgm.render()`]

With the right choices, this models a Bayesian linear regression. To read this:

- $\mu$ and $\sigma^2$ are global static values

- $\beta$ is a global random value determined through $\mu$ and $\sigma^2$

- $\epsilon$ is a global random value

- $y_i$ are indexed observed values (shaded circle)

- $\mathbf{x_i}$ are indexed static values

- Everything inside the plate occurs in separate copies for indices $i\in1:N$
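Reading the diagram from parents to children gives a recipe for simulating data. The sketch below is one possible instantiation, with several assumptions not fixed by the diagram: scalar $x_i$ and $\beta$, a Normal prior on $\beta$, a half-normal $\epsilon$ used as noise scale, and Normal observations. The function name `sample_regression` is hypothetical.

```python
import random

def sample_regression(xs, mu=0.0, sigma2=1.0, seed=0):
    """Ancestral sampling: draw global variables first, then each y_i."""
    rng = random.Random(seed)
    # Global random value determined through mu and sigma^2 (assumed Normal).
    beta = rng.gauss(mu, sigma2 ** 0.5)
    # Global random noise scale (half-normal is an assumption).
    epsilon = abs(rng.gauss(0.0, 1.0))
    # Inside the plate: one observation per index i, given beta, epsilon, x_i.
    ys = [rng.gauss(beta * x, epsilon) for x in xs]
    return beta, epsilon, ys

xs = [0.0, 1.0, 2.0, 3.0]
beta, epsilon, ys = sample_regression(xs)
print(beta, epsilon, ys)
```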

The complete graph encodes the joint probability of $\epsilon$, $\beta$ and all the $y_i$, where each arrow contributes a conditional probability:

$$ \mathbb{P}(\epsilon, \beta, y_{1:N} \mid \mu, \sigma^2, x_{1:N}) = \mathbb{P}(\epsilon)\, \mathbb{P}(\beta \mid \mu, \sigma^2) \prod_{i\in1:N}\mathbb{P}(y_i \mid \epsilon, \beta, x_i) $$

Latent Dirichlet Allocation¶

Given

- Corpus of $D$ documents

- Each document a sequence of $N_d$ words

- $K$ posited topics

Latent Dirichlet Allocation is a model:

[Figure: plate diagram of the LDA model, produced by `lda.render()`]

- For each topic $k$, $\beta_k$ are Dirichlet distributed with parameter $\eta$. This models the probability of a word occurring in this topic.

- For each document $d$, $\theta_d$ are Dirichlet distributed with parameter $\alpha$. This models the distribution of topics in the document.

- For each word $n$ in document $d$, draw a topic $z_{d,n}\sim\text{Multinomial}(\theta_d)$ from the document's topic distribution, then draw the observed word $w_{d,n}\sim\text{Multinomial}(\beta_{z_{d,n}})$
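The three steps above describe how LDA assumes a corpus is generated, and they can be simulated directly. This sketch only samples from the model; it does not fit anything. It assumes symmetric Dirichlet parameters and draws Dirichlet samples by normalizing Gamma variates. The function names, vocabulary and parameter values are hypothetical.

```python
import random

def sample_dirichlet(rng, concentration, size):
    """Symmetric Dirichlet draw via normalized Gamma variates."""
    draws = [rng.gammavariate(concentration, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]

def generate_corpus(vocabulary, K, doc_lengths, alpha=0.5, eta=0.5, seed=0):
    """Sample documents from the LDA generative process."""
    rng = random.Random(seed)
    # For each topic k: a distribution beta_k over the vocabulary.
    beta = [sample_dirichlet(rng, eta, len(vocabulary)) for _ in range(K)]
    corpus = []
    for N_d in doc_lengths:
        # For each document d: a distribution theta_d over topics.
        theta_d = sample_dirichlet(rng, alpha, K)
        words = []
        for _ in range(N_d):
            # For each word: draw a topic, then a word from that topic.
            z = rng.choices(range(K), weights=theta_d)[0]
            w = rng.choices(vocabulary, weights=beta[z])[0]
            words.append(w)
        corpus.append(words)
    return corpus

vocabulary = ["palm", "hand", "sand", "grain", "world", "heaven"]
corpus = generate_corpus(vocabulary, K=2, doc_lengths=[5, 8, 3])
print(corpus)
```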

Fitting an LDA model uses variational inference or Gibbs sampling to estimate the parameters.

Transforming with an LDA model produces document topic distributions given a document/word matrix.

So where can we get text data?¶

Social Media APIs¶

Many social media platforms offer REST APIs for access, often with libraries provided for major programming languages.

- Twitter Streaming API

  - Pick keywords, usernames, locations, etc. to sample from

  - Sample of tweets

  - Between 1% and 40% of the relevant Twitter stream

- Twitter Firehose

  - Pick keywords, usernames, locations, etc. to sample from

  - 100% delivery

  - Work through one of two providers: GNIP and DataSift

- Reddit data dumps

  - See Blackboard for links to BigQuery datasets

Corpus collections¶

- https://www.english-corpora.org

- Google Books

- Google n-grams

On corpora¶

Words get their meaning from their use and their contexts. Meaning comes from use, not etymology, not prejudice.

Corpora provide text samples, often tagged with additional linguistic information.

Some corpora (COCA, BNC) are balanced: contain text samples from different sources - spoken; fiction; non-fiction; news; …

Text analysis cannot provide negative evidence. Large text samples always contain patterns, so start from an articulated research question.

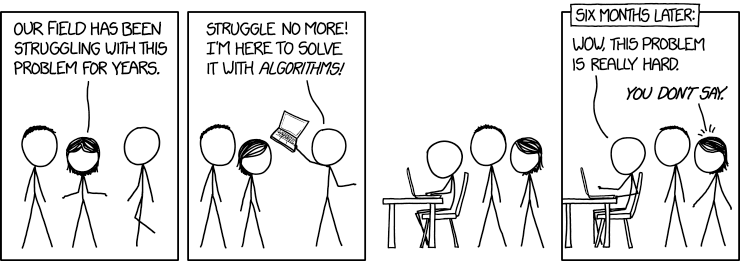

Collaborate with a linguist!!!¶

"We TOLD you it was hard." "Yeah, but now that I'VE tried, we KNOW it's hard."