Internal States as Encoding

Since each successive layer of a neural network represents a higher-level abstraction of the input, the activations of its hidden layers can be used as semantic encodings.

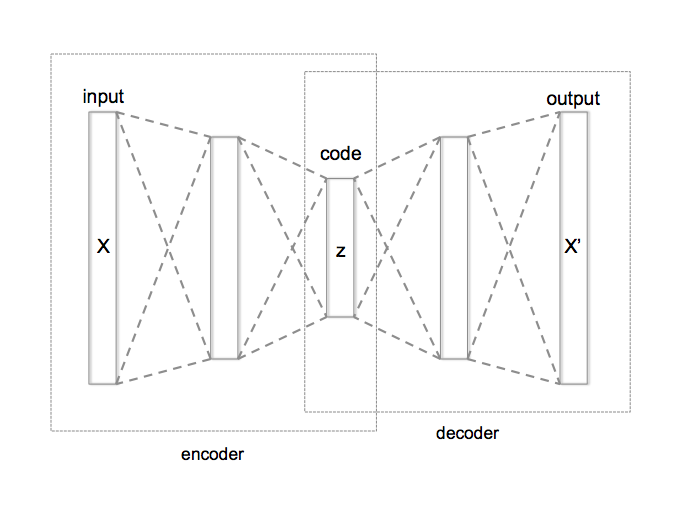

- word2vec trains a network to predict a missing word in a text from its surrounding context.
- Autoencoders train a pair of networks (an encoder and a decoder) to reconstruct their own input.

word2vec

Train a network to fill in the blank in a sentence:

The _ wears a crown.
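As a rough illustration of how such fill-in-the-blank examples can be generated from raw text, the sketch below slides a window over a tokenized sentence and pairs each word with its neighbours. The helper name `context_target_pairs`, the window size, and the choice of "king" as the blanked word are assumptions made for this example, not details of the original word2vec code.

```python
def context_target_pairs(tokens, window=2):
    """Build (context_words, target_word) pairs: the target is the 'blank'."""
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]      # up to `window` words before
        right = tokens[i + 1:i + 1 + window]     # up to `window` words after
        pairs.append((left + right, target))
    return pairs

# Illustrative sentence; "king" is just one plausible filler for the blank.
sentence = "the king wears a crown".split()
pairs = context_target_pairs(sentence, window=2)
for context, target in pairs:
    print(context, "->", target)
```

Each pair is one training example: the network sees the context words and is asked to predict the target.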

When trained on a large corpus, the last hidden layer has proven useful as a word embedding. This construction produces nice algebraic properties:

- king - man + woman → queen

- Munich - Germany + France → Paris
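The arithmetic above can be sketched with hand-made toy vectors. The two-dimensional values below are invented for illustration (one axis loosely standing for "royalty", the other for "gender") and are not trained embeddings; the helper `analogy` is likewise a hypothetical name. The answer is taken to be the nearest remaining word by cosine similarity.

```python
import math

# Toy embeddings with made-up values (NOT trained):
# axis 0 ~ "royalty", axis 1 ~ "gender".
emb = {
    "king":  [1.0, 1.0],
    "queen": [1.0, -1.0],
    "man":   [0.0, 1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def analogy(a, b, c, emb):
    """Return the word closest to emb[a] - emb[b] + emb[c], excluding a, b, c."""
    query = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(query, emb[w]))

result = analogy("king", "man", "woman", emb)
print(result)  # queen
```

Excluding the query words from the candidates is a common convention, since the nearest vector to the result is often one of the inputs themselves.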

The embedding is only as good as its training corpus: biases present in the text carry over into the vectors.

- computer programmer - man + woman → housewife

Autoencoders

- Dimensionality reduction: set the encoding $z$ to a lower dimension than the input $x$

- Generative model: feed new combinations of encoding values into the decoder

- Denoising: train on corrupted inputs with the clean data as the target, so the network learns to remove noise
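The dimensionality-reduction use can be sketched with a deliberately tiny autoencoder: a linear encoder that maps a 2-D input $x$ to a 1-D code $z$, and a linear decoder that maps $z$ back to 2-D. Everything here is an assumption chosen for illustration (the synthetic data on the line $x_2 = 2 x_1$, the initial weights, the learning rate, and the use of single linear layers rather than deeper networks).

```python
def reconstruct(x, w_enc, w_dec):
    """Encode x (2-D) into a scalar z, then decode z back to 2-D."""
    z = sum(w * xi for w, xi in zip(w_enc, x))  # encoder: 2-D -> 1-D
    x_hat = [w * z for w in w_dec]              # decoder: 1-D -> 2-D
    return z, x_hat

def mean_loss(data, w_enc, w_dec):
    """Mean squared reconstruction error over the dataset."""
    total = 0.0
    for x in data:
        _, x_hat = reconstruct(x, w_enc, w_dec)
        total += sum((h - xi) ** 2 for h, xi in zip(x_hat, x))
    return total / len(data)

# Synthetic data lying on a line, so one latent dimension is enough.
data = [[t, 2 * t] for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
w_enc, w_dec = [0.5, 0.5], [0.5, 0.5]  # arbitrary starting weights
lr = 0.05                              # arbitrary learning rate
initial_loss = mean_loss(data, w_enc, w_dec)

for _ in range(500):
    g_enc, g_dec = [0.0, 0.0], [0.0, 0.0]
    for x in data:
        z, x_hat = reconstruct(x, w_enc, w_dec)
        err = [h - xi for h, xi in zip(x_hat, x)]
        dz = sum(2 * e * w for e, w in zip(err, w_dec))  # dLoss/dz
        for i in range(2):
            g_dec[i] += 2 * err[i] * z / len(data)       # dLoss/dw_dec[i]
            g_enc[i] += dz * x[i] / len(data)            # dLoss/dw_enc[i]
    # Full-batch gradient descent step on both halves of the network.
    w_enc = [w - lr * g for w, g in zip(w_enc, g_enc)]
    w_dec = [w - lr * g for w, g in zip(w_dec, g_dec)]

final_loss = mean_loss(data, w_enc, w_dec)
print(initial_loss, final_loss)
```

For denoising, the same loop would apply with a corrupted copy of `x` passed to the encoder while the error is still measured against the clean `x`.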